How To Make Fossils Productive Again

30 Apr 2016

At NorCal Database Day 2016, I served on a panel titled 40+ Years of Database Research: Do We Have an Identity Crisis? What follows is a loose transcript of my talk, which I enjoyed writing and delivering and which I hope you enjoy reading.

The title of my short talk today is “How Can We Make Fossils Productive Again?” and the central question of this panel is “Does the Database Community Have an Identity Crisis?”

I say: “Sure! We’ve got a crisis. We’ve got an identity crisis. We’ve got a mid-life crisis. Our community is in all sorts of crises.”

At the heart of our crises is the fact that this is literally the golden age for data. Things have never been better for data. Everyone wants a piece of data. Data is all over Silicon Valley, and Big Data, and Machine Learning, and all of these awesome tools, and yet much (or even maybe most) of the greatest, cutting-edge data-related research is not happening in the core database community. Much of the highest-impact data-related research appears in venues like OSDI and SOSP or NIPS and ICML. It’s like we’ve somehow lost control and are suddenly no longer the kings and queens of data. We’ve missed out on producing much of the seminal (and earliest) research in fields including Big Data, MapReduce and Hadoop, NoSQL, Cloud Computing, Graphs, Deep Neural Networks, and “Big ML.”

(It’s not like these results are just coming out of the machine learning or statistics communities; they’re coming out of adjacent communities such as “systems.”)

So, what’s up? Why are we in crisis?

Let’s consider a brief history of database research:

Back in the 1970s, we were the cool kids! Codd came out with his relational model, and the earliest database researchers got super excited and said: “we’re going to run behind this crazy idea.” Back in the 1970s, we were the revolutionaries! Remember that the relational model wasn’t always the way to do data management. System R was a batshit project where someone said “we’re going to take this theory paper and throw 25+ people behind it and build an actual system” and along the way at least two Turing awards and a number of groundbreaking concepts came out of it. Today, it’s easy to take this crazy bet for granted, but remember: we went all in on a really cutting-edge, guerrilla research agenda, and it paid off.

Today, it’s 46 years later, and we have four Turing awards, many hundreds of billions of dollars of revenue, and tons of wonderful research to show for our big bet. However, despite these many successes, as this panel’s abstract suggests, we’re also at risk of ossification, at risk of becoming reactionary fossils. The community narrative often runs along the lines of: “What is this ‘new’ stuff? What is this ‘NoSQL/Big Data/etc.’? This doesn’t look like a relational database or like conventional database research! This doesn’t fit my idea of a ‘database’!” In fact, many amazing ideas explored in database research over the last fifteen years were largely ignored or took the form: “here’s a great idea from [some interesting field like signal processing], but I have to cram it inside of SQL!” Or, alternatively, we say: “Bah! We did this in the 1980s. Strong reject”; and then, just a few years later: “Oh wow, industry cares about this problem and it turns out we overlooked a critical difference from the past. Let’s start publishing!”

So, as the panel abstract suggests, we may in fact be at risk of becoming reactionary fossils…

…which brings us to the title of my talk: how do we make fossils productive again?

The answer is simple: set them on fire and use them as fuel.

We take the great ideas this community has developed and leverage them to push onward, upward, forward.

How exactly are we going to use these fossils as fuel?

I believe our role as a community is to discover and define the future standards for how data-intensive platforms and tools should look and operate.

No other community has greater claim to data. We own the core idioms for reasoning about and dealing with data. We invented (or were instrumental in developing) critical concepts, including declarativity, query processing, materialized views, transaction processing, data storage, consistency, and scalability.

As a result, we deserve and owe it to ourselves to bring these concepts to new domains, applications, and platforms. It’s time to reclaim our roots as systems revolutionaries, powered by the principles underlying our historical successes.

I’m not going to tell you what to work on (and, in fact, I already spoke earlier today about what I think you should work on next: analytic monitoring). Instead, as a young, irreverent professor (or, rather, professor-to-be-in-32-hours), I’ll offer four pieces of advice:

- Kill the reference architecture and rethink our conception of “database.” The article titled “Architecture of a Database System” should be considered harmful. If a system doesn’t have a buffer pool, it can still be a database, and, in fact, I’d prefer not to read any more papers on “databases” that have buffer pools. Instead, I’d prefer you shock me with your radical, new (and useful!) conception of a data management platform.

- Solve new, emerging, real problems outside traditional relational database systems. We’re in Northern California. This is NorCal DB Day. As far as I’m concerned, this is the center of the universe for data. If you go talk to real users or go and talk to people outside CS working at universities, it’s astounding to see the number of data-related tools that have yet to be written that don’t look anything like existing database engines. [This advice also holds for just about anyone with an Internet connection.]

- Use data-intensive tools, both the tools that you’re building and the tools that others have built. Most data-intensive tools are awful to use. NoSQL and Hadoop partly came out of the fact that setting up data infrastructure sucks. It’s often impossible to load data if you don’t know your schema, which sucks. Data warehouses also cost a lot of money, which sucks. Treat these things that suck as inspiration. The world isn’t just some giant tire fire; rather, the world is waiting to be filled with beautiful data management tools that you can design, build, and work to understand in your research.

- Do bold, weird, and hard projects and actually follow through. Just publishing a paper isn’t enough. Today, our prerogative as researchers goes beyond simply publishing one paper, or some CIDR vision paper that remains a vision unrealized. As a community, we have an opportunity to build real projects and products, especially via open source, that take on lives of their own beyond the basics of research and can also inform our research agenda going forward. Let’s recognize that opportunity and encourage the ambition it requires.

There are a number of recent projects that illustrate this advice. Three examples:

- Peter Alvaro’s work on Molly and Lineage-Driven Fault Injection takes classic techniques from data provenance and applies them to understanding and debugging distributed systems and their failures. This research isn’t just a SIGMOD paper; about a month ago, Peter gave a keynote in London with his industrial collaborator describing how they productionized this work at Netflix, where it finds real bugs. Theory + practice, applied.

- Chris Ré’s work on DeepDive illustrates how to build dark data extraction engines that match or exceed human quality. Like MacroBase, DeepDive is a new kind of data processing system that’s providing value by leveraging core concepts from databases such as probabilistic inference and weakly-consistent query processing. And it works on real problems, like combating human trafficking.

- A recent project I wish the database community had done is TensorFlow at Google, which is a distributed, scale-out Torch/Theano/Caffe-like model training framework. This is work that I think we should have done in our community. [Theo interrupts and says Chris’s group is solving this problem. Since Chris is a genius, I believe Theo.]

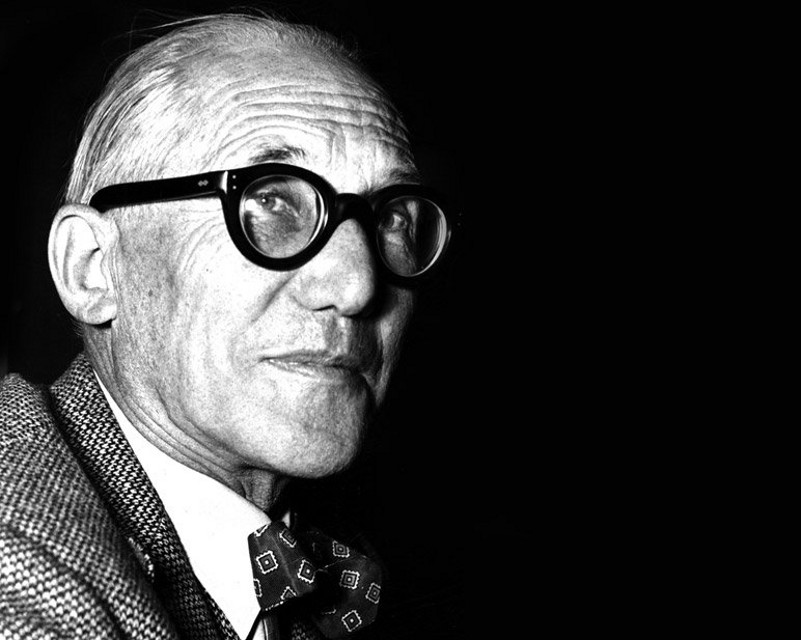

Before I end, does anyone know who this person is?

[No one knows.]

This is one of my role models. This is Le Corbusier, a great architect who pioneered many of the ideas and designs in modern architecture that we know and love today.

In the early 1920s, Le Corbusier and friends faced a similar problem to the one the database community faces today. Le Corbusier and friends felt that the practice of architecture had ossified and had become stifled by tradition, that the field was essentially dead.

In response, Le Corbusier wrote a beautiful book that translates to Towards A New Architecture. Le Corbusier wrote, in effect, that “yes, the Parthenon is perhaps the most beautiful instance, the perfect example of a particular standard of architecture. The Parthenon may have achieved the platonic ideal of the standard of architecture we’ve previously established. But there are many possible standards to acknowledge, each dependent on need and use. Standards are established by experiment.”

To reiterate, and to quote directly, Le Corbusier showed us that “a standard is definitely established by experiment.” Le Corbusier went on to revolutionize our conceptions of architecture and design. Le Corbusier wasn’t always right, but he catalyzed the field.

Today, relational database engines and the status quo in data management reflect an impressive standard. But there are many possible standards, suitable for different needs and uses. Today, it’s our time to experiment, to establish new standards.

My name is Peter Bailis, and I’m putting my shoulder to the wheel.
